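Deploying a GlusterFS Cluster

- Three nodes, gfs01.mitnick.fun, gfs02.mitnick.fun and gfs03.mitnick.fun, form the GlusterFS storage cluster, and gfs01.mitnick.fun is also deployed as the Heketi server. On every node, sda is used to provide storage space for GlusterFS.

- Install the glusterfs-server package on each of the three nodes and start the glusterd service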

[root@worker1 ~]# yum install centos-release-gluster -y

[root@worker1 ~]# yum --enablerepo=centos-gluster*-test -y install glusterfs-server

[root@worker1 ~]# systemctl start glusterd.service && systemctl enable glusterd.service

- Edit the hosts file on each node

[root@worker1 ~]# vim /etc/hosts

Add the following entries

10.5.24.223 gfs01.mitnick.fun

10.5.24.224 gfs02.mitnick.fun

10.5.24.225 gfs03.mitnick.fun

- On node 01, use the "gluster peer probe" command to discover the other nodes and form the GlusterFS cluster

[root@worker1 ~]# gluster peer probe gfs02.mitnick.fun

peer probe: success.

[root@worker1 ~]# gluster peer probe gfs03.mitnick.fun

peer probe: success.

- Use the gluster peer status command to confirm that all nodes have joined the same trusted pool

[root@worker1 ~]# gluster peer status

Number of Peers: 2

Hostname: gfs02.mitnick.fun

Uuid: 59905d22-494c-4ff0-b151-3a9d0a48ba29

State: Peer in Cluster (Connected)

Hostname: gfs03.mitnick.fun

Uuid: e9999498-d37b-4f85-a7b0-316fbcae4ff6

State: Peer in Cluster (Connected)

Deploying Heketi: gfs01.mitnick.fun is deployed as the Heketi server, and all of the following commands are run on gfs01.mitnick.fun
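Before moving on to Heketi, it may be worth checking from a script that every peer is actually connected. The following is only a minimal sketch, not a GlusterFS feature: `count_connected_peers` is a hypothetical helper name, and it assumes the `gluster peer status` output format shown above.

```shell
# Hypothetical helper (not part of GlusterFS): reads `gluster peer status`
# output on stdin and prints how many peers are reported as connected.
count_connected_peers() {
  # grep -c exits non-zero when the count is 0; "|| true" keeps the
  # function usable under "set -e".
  grep -c '^State: Peer in Cluster (Connected)' || true
}

# Intended usage on gfs01 (2 is expected for the three-node pool above):
#   gluster peer status | count_connected_peers
```

Counting lines rather than reading the "Number of Peers" header is a deliberate choice here, since a peer can be listed in the pool while being disconnected.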

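- Install Heketi

[root@worker1 ~]# yum -y install heketi heketi-client

- Configure the heketi user so that it can connect to every node in the GlusterFS cluster using SSH key-based authentication, with administrative privileges on those nodes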

[root@worker1 ~]# ssh-keygen -f /etc/heketi/heketi_key -t rsa -N ''

[root@worker1 ~]# chown heketi:heketi /etc/heketi/heketi_key*

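[root@worker1 ~]# for host in gfs01.mitnick.fun gfs02.mitnick.fun gfs03.mitnick.fun; do ssh-copy-id -i /etc/heketi/heketi_key.pub root@${host}; done

- Set up Heketi's main configuration file, /etc/heketi/heketi.json. The default configuration is shown below. Note that the default executor is mock, which sends no commands to the nodes; for Heketi to manage the cluster with the SSH key created above, executor must be set to ssh, with sshexec.keyfile pointing to /etc/heketi/heketi_key and sshexec.user set to root.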

{
  "_port_comment": "Heketi Server Port Number",
  "port": "8080",
  "_use_auth": "Enable JWT authorization. Please enable for deployment",
  "use_auth": false,
  "_jwt": "Private keys for access",
  "jwt": {
    "_admin": "Admin has access to all APIs",
    "admin": {
      "key": "My Secret"
    },
    "_user": "User only has access to /volumes endpoint",
    "user": {
      "key": "My Secret"
    }
  },
  "_glusterfs_comment": "GlusterFS Configuration",
  "glusterfs": {
    "_executor_comment": [
      "Execute plugin. Possible choices: mock, ssh",
      "mock: This setting is used for testing and development.",
      " It will not send commands to any node.",
      "ssh: This setting will notify Heketi to ssh to the nodes.",
      " It will need the values in sshexec to be configured.",
      "kubernetes: Communicate with GlusterFS containers over",
      " Kubernetes exec api."
    ],
    "executor": "mock",
    "_sshexec_comment": "SSH username and private key file information",
    "sshexec": {
      "keyfile": "path/to/private_key",
      "user": "sshuser",
      "port": "Optional: ssh port. Default is 22",
      "fstab": "Optional: Specify fstab file on node. Default is /etc/fstab"
    },
    "_kubeexec_comment": "Kubernetes configuration",
    "kubeexec": {
      "host": "https://kubernetes.host:8443",
      "cert": "/path/to/crt.file",
      "insecure": false,
      "user": "kubernetes username",
      "password": "password for kubernetes user",
      "namespace": "OpenShift project or Kubernetes namespace",
      "fstab": "Optional: Specify fstab file on node. Default is /etc/fstab"
    },
    "_db_comment": "Database file name",
    "db": "/var/lib/heketi/heketi.db",
    "_loglevel_comment": [
      "Set log level. Choices are:",
      " none, critical, error, warning, info, debug",
      "Default is warning"
    ],
    "loglevel": "debug"
  }
}

- Start Heketi and enable it at boot

[root@worker1 ~]# systemctl enable heketi && systemctl start heketi

- Test Heketi

[root@worker1 ~]# curl http://gfs01.mitnick.fun:8080/hello

Hello from Heketi

Setting Up the Heketi System Topology
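Loading a topology only makes sense once the Heketi service is answering requests. As a rough sketch, a script could poll the `/hello` endpoint used in the test above until it returns the expected greeting. `wait_for_heketi` and its retry parameters are hypothetical names chosen for illustration; only the `/hello` endpoint and its response come from the steps above.

```shell
# Hypothetical helper: poll Heketi's /hello endpoint until it answers.
#   $1 = base URL, $2 = max attempts (default 10), $3 = delay in seconds (default 2)
wait_for_heketi() {
  local url="$1"
  local attempts="${2:-10}"
  local delay="${3:-2}"
  local i=1
  while [ "$i" -le "$attempts" ]; do
    # -f: fail on HTTP errors, -sS: quiet but still report real errors
    if [ "$(curl -fsS "$url/hello" 2>/dev/null)" = "Hello from Heketi" ]; then
      return 0
    fi
    sleep "$delay"
    i=$((i + 1))
  done
  return 1
}

# Intended usage:
#   wait_for_heketi http://gfs01.mitnick.fun:8080 || echo "Heketi is not responding"
```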

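- Write the topology to /etc/heketi/topology.json. The devices list of each node must match the block devices actually present on that node (the load output further below was produced with only /dev/sda listed).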

{
  "clusters": [{
    "nodes": [{
        "node": {
          "hostnames": {
            "manage": [
              "10.5.24.223"
            ],
            "storage": [
              "10.5.24.223"
            ]
          },
          "zone": 1
        },
        "devices": [
          "/dev/sdb",
          "/dev/sdc",
          "/dev/sdd"
        ]
      },
      {
        "node": {
          "hostnames": {
            "manage": [
              "10.5.24.224"
            ],
            "storage": [
              "10.5.24.224"
            ]
          },
          "zone": 1
        },
        "devices": [
          "/dev/sdb",
          "/dev/sdc",
          "/dev/sdd"
        ]
      },
      {
        "node": {
          "hostnames": {
            "manage": [
              "10.5.24.225"
            ],
            "storage": [
              "10.5.24.225"
            ]
          },
          "zone": 1
        },
        "devices": [
          "/dev/sdb",
          "/dev/sdc",
          "/dev/sdd"
        ]
      }
    ]
  }]
}

- Run the following commands to load the topology and complete the cluster configuration. They create a cluster and generate a random ID for it and for each node added to it.

[root@worker1 ~]# export HEKETI_CLI_SERVER=http://gfs01.mitnick.fun:8080

[root@worker1 heketi]# heketi-cli topology load --json=topology.json

Creating cluster ... ID: a2382eaaab98f3feb55961b37c7fb503

Allowing file volumes on cluster.

Allowing block volumes on cluster.

Creating node 10.5.24.223 ... ID: 2b717655a23dca54bf113dc49b2eb0a3

Adding device /dev/sda ... OK

Creating node 10.5.24.224 ... ID: 8295c7fd9819f48284251361b23d8af5

Adding device /dev/sda ... OK

Creating node 10.5.24.225 ... ID: c22123f193de86d67c1fb6a808310bb6

Adding device /dev/sda ... OK

- Run the following command to check the cluster status

[root@worker1 heketi]# heketi-cli cluster info a2382eaaab98f3feb55961b37c7fb503

Cluster id: a2382eaaab98f3feb55961b37c7fb503

Nodes:

2b717655a23dca54bf113dc49b2eb0a3

8295c7fd9819f48284251361b23d8af5

c22123f193de86d67c1fb6a808310bb6

Volumes:

Block: true

File: true

- Use heketi-cli volume create --size=SIZE [options] to create a storage volume (SIZE is the volume size in GiB)

[root@worker1 heketi]# heketi-cli volume create --size=20

Name: vol_4ba7b17e5839c1e5d71a1067339941a2

Size: 20

Volume Id: 4ba7b17e5839c1e5d71a1067339941a2

Cluster Id: a2382eaaab98f3feb55961b37c7fb503

Mount: 10.5.24.223:vol_4ba7b17e5839c1e5d71a1067339941a2

Mount Options: backup-volfile-servers=10.5.24.224,10.5.24.225

Block: false

Free Size: 0

Reserved Size: 0

Block Hosting Restriction: (none)

Block Volumes: []

Durability Type: replicate

Distributed+Replica: 3

- The command to delete a Heketi volume is heketi-cli volume delete, followed by the volume ID

[root@worker1 heketi]# heketi-cli volume delete 4ba7b17e5839c1e5d71a1067339941a2

Volume 4ba7b17e5839c1e5d71a1067339941a2 deleted

- A GlusterFS storage cluster that supports dynamic volume provisioning is now set up.
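In the steps above, the cluster ID printed by `heketi-cli topology load` is copied by hand into `heketi-cli cluster info`. When scripting the setup, the ID could instead be captured from the command output. The following is a minimal sketch under the assumption that the output keeps the "Creating cluster ... ID: ..." format shown earlier; `extract_cluster_id` is a hypothetical helper, not a heketi-cli feature.

```shell
# Hypothetical helper: reads `heketi-cli topology load` output on stdin and
# prints the ID from the "Creating cluster ... ID: <hex>" line.
extract_cluster_id() {
  sed -n 's/^[[:space:]]*Creating cluster \.\.\. ID: \([0-9a-f][0-9a-f]*\).*$/\1/p'
}

# Intended usage (run from the directory that holds topology.json):
#   cluster_id=$(heketi-cli topology load --json=topology.json | extract_cluster_id)
#   heketi-cli cluster info "$cluster_id"
```

Node lines such as "Creating node ... ID: ..." are ignored on purpose, so only the cluster ID is printed.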

Using Manually Created Storage Volumes in k8s

- Manually create a GlusterFS volume

# Run on all nodes

[root@worker1 ~]# mkdir -p /data/brick/kube-glusterfs

# Run on gfs01.mitnick.fun

[root@worker1 ~]# gluster volume create kube-glusterfs replica 3 gfs01.mitnick.fun:/data/brick/kube-glusterfs gfs02.mitnick.fun:/data/brick/kube-glusterfs gfs03.mitnick.fun:/data/brick/kube-glusterfs force

volume create: kube-glusterfs: success: please start the volume to access data

[root@worker1 ~]# gluster volume start kube-glusterfs

volume start: kube-glusterfs: success
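The brick arguments passed to `gluster volume create` above all follow the same `host:path` pattern. If the node list changes often, they could be generated rather than typed. This is only an illustrative sketch; `brick_args` is a hypothetical helper name, and the hosts and path are the ones used in this article.

```shell
# Hypothetical helper: print one "host:path" brick argument per host.
#   $1 = brick directory, remaining arguments = hostnames
brick_args() {
  local brick_dir="$1"
  local host
  shift
  for host in "$@"; do
    printf '%s:%s\n' "$host" "$brick_dir"
  done
}

# Intended usage (the unquoted $(...) is deliberate so that each brick
# becomes a separate argument):
#   gluster volume create kube-glusterfs replica 3 \
#     $(brick_args /data/brick/kube-glusterfs gfs01.mitnick.fun gfs02.mitnick.fun gfs03.mitnick.fun) force
```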

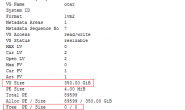

[root@worker1 ~]# gluster volume info

Volume Name: kube-glusterfs

Type: Replicate

Volume ID: 4be17a6f-3dde-402f-80e6-c9b7e6ebd164

Status: Started

Snapshot Count: 0

Number of Bricks: 1 x 3 = 3

Transport-type: tcp

Bricks:

Brick1: gfs01.mitnick.fun:/data/brick/kube-glusterfs

Brick2: gfs02.mitnick.fun:/data/brick/kube-glusterfs

Brick3: gfs03.mitnick.fun:/data/brick/kube-glusterfs

Options Reconfigured:

transport.address-family: inet

nfs.disable: on

performance.client-io-threads: off

- Create the Endpoints object

apiVersion: v1
kind: Endpoints
metadata:
  name: glusterfs-endpoints
  namespace: dev
subsets:
- addresses:
  - ip: 10.5.24.223
  ports:
  - port: 24007
    name: glusterd
- addresses:
  - ip: 10.5.24.224
  ports:
  - port: 24007
    name: glusterd
- addresses:
  - ip: 10.5.24.225
  ports:
  - port: 24007
    name: glusterd

- Reference the volume in the pod definition

volumeMounts:
- name: glusterfsdata
  mountPath: /glusterdata
volumes:
- name: glusterfsdata
  glusterfs:
    endpoints: glusterfs-endpoints
    path: kube-glusterfs
    readOnly: false

This article is excerpted from 《kubernetes进阶实战》 by 马哥.

When reposting, please credit: MitNick » Deploying glusterfs and Heketi